MAPS: Multi-Agent Production Scheduling

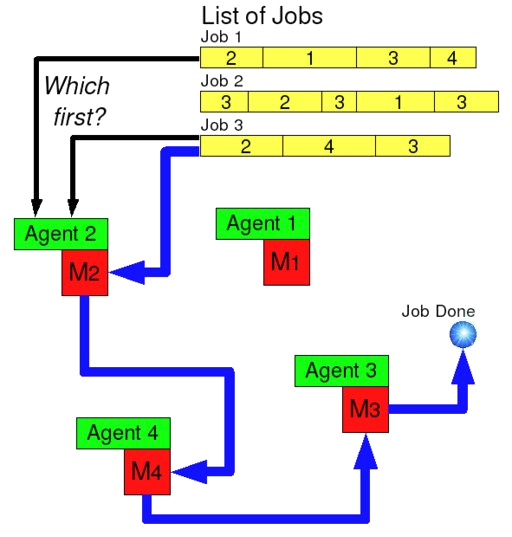

![]() Most approaches to tackle job-shop scheduling

problems assume complete task knowledge and search for a

centralized solution. In this project, we adopt an alternative view

on scheduling problems where each resource is equipped with

an adaptive agent that, independent of other agents, makes

job dispatching decisions based on its local view on the plant

and employs reinforcement learning to improve its dispatching

strategy.

Most approaches to tackle job-shop scheduling

problems assume complete task knowledge and search for a

centralized solution. In this project, we adopt an alternative view

on scheduling problems where each resource is equipped with

an adaptive agent that, independent of other agents, makes

job dispatching decisions based on its local view on the plant

and employs reinforcement learning to improve its dispatching

strategy.

Several extensions are necessary to render this learning approach applicable to job-shop scheduling problems of current standards of difficulty. Besides those challenges, one of the main goals we are pursuing in the scope of this project is to empirically evaluate the strength and the advantages of the methods proposed on established scheduling benchmark problems.

Technical Background

In production scheduling, tasks have to be allocated to a limited number of resources in such a manner that one or more objectives are optimized. Though various classical approaches can be shown to provide optimal solutions to various scheduling problem variants, they typically do not scale with problem size, suffering from an exponential increase in computation time.

In this project we advocate an alternative approach to production scheduling that performs reactive scheduling and is capable of producing approximate solutions in minimal time. Here, each resource is equipped with a scheduling agent that makes the decision on which job to process next based solely on its partial view on the plant. As each agent follows its own decision policy, thus rendering a central control unnecessary, this approach is particularly suitable for environments where unexpected events, such as the arrival of new tasks or machine breakdowns, may occur and, hence, frequent re-planning would be required.

We employ reinforcement learning (RL) to let the scheduling agents acquire their control policies on their own on the basis of trial and error by repeated interaction within their environment. After that learning phase, each agent will have obtained a purposive, reactive behavior for the respective environment. Then, during the application phase, e.g. during application in a real plant, each agent can make its scheduling decisions very quickly by utilizing its reactive behavior.

Multi-Agent Perscpective

In the literature on multi-agent learning, a distinction between

joint-action learners and independent learners is made.

The former can observe their own, as well as the other agents’

action choices. Consequently, in that case the multi-agent

MDP can be reverted to a single-agent MDP with an extended

action set and be solved by some standard method. Here,

however, we concentrate on independent learners because:

In the literature on multi-agent learning, a distinction between

joint-action learners and independent learners is made.

The former can observe their own, as well as the other agents’

action choices. Consequently, in that case the multi-agent

MDP can be reverted to a single-agent MDP with an extended

action set and be solved by some standard method. Here,

however, we concentrate on independent learners because:

- We want to take a fully distributed view on multi-agent scheduling. The agents are completely decoupled from one another, get local state information, and are not allowed to share information via communication.

- Decision-making shall take place in a distributed, reactive manner. Hence, no agent will be aware of the jobs being processed next on other resources.

- The consideration of joint-action learners with global view on the plant would take us nearer to giving all agents the ability to, e.g., construct a disjunctive graph for the scheduling problem at hand and use some classical solution method to solve it. With respect to 1. and 2., this is exactly what we intend to avoid.

We have investigated both, value function-based multi-agent reinforcement learning approaches (see publications [4,5,6]) as well as policy search-based multi-agent reinforcement learning approaches (see publications [1,2,3]). In [4], we have also examined the decision-theoretic foundations of decentralized decision-making in more detail. A comprehensive survey of all approaches can be found in this book.

Publications

- T. Gabel and M. Riedmiller. Distributed Policy Search Reinforcement Learning for Job-Shop Scheduling Tasks. International Journal of Production Research. Accepted.

- T. Gabel and M. Riedmiller. Gradient-Descent Policy Search for Job-Shop Scheduling Problems. Online Proceedings of the 18th International Conference on Automated Planning and Scheduling (ICAPS 2008). AAAI Press, Sydney, Australia, September 2008.

- T. Gabel and M. Riedmiller. Joint Equilibrium Policy Search for Multi-Agent Scheduling Problems. Proceedings of the 6th Conference on Multiagent System Technologies (MATES 2008). Springer, Kaiserslautern, September 2008. pdf

- T. Gabel. and M. Riedmiller. Evaluation of Batch-Mode Reinforcement Learning for Solving DEC-MDPs with Changing Action Sets. Proceedings of the 8th Biennial European Workshop on Reinforcement Learning (EWRL 2008). Springer, Lille, France, June 2008. pdf

- T. Gabel and M. Riedmiller. Adaptive Reactive Job-Shop Scheduling with Reinforcement Learning Agents. International Journal of Information Technology and Intelligent Computing, 24(4), IEEE Press, 2007. pdf

- T. Gabel and M. Riedmiller. Scaling Adaptive Agent-Based Reactive Job-Shop Scheduling to Large-Scale Problems. Proceedings of the IEEE Symposium on Computational Intelligence in Scheduling (CI-Sched 2007). Honolulu, USA, 2007. pdf

People

Researchers involved in this project:

Former members and student assistants:

- Hannes Schulz

- Tobias Springenberg