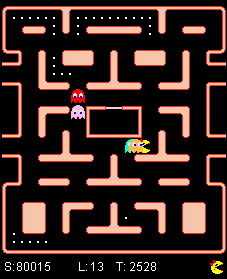

Learning how to play Pac-Man

The Ms. Pac-Man vs. Ghosts Competition provides an easy and fun way for students to experiment with different Machine Learning techniques in order to beat this arcade classic. It consists of a simple Java implementation of the game, leaving open an interface for programming one's own Pac-Man or Ghosts agent. Several groups of our students tried their wits on this competition, with approaches ranging from simple solvers to Neuro-Evolution. Here, we will focus on a simple yet powerful approach tackled by a team of undergraduate students: On-line Q-Learning using Linear Function Approximation for a Pac-Man agent.

The Ms. Pac-Man vs. Ghosts Competition provides an easy and fun way for students to experiment with different Machine Learning techniques in order to beat this arcade classic. It consists of a simple Java implementation of the game, leaving open an interface for programming one's own Pac-Man or Ghosts agent. Several groups of our students tried their wits on this competition, with approaches ranging from simple solvers to Neuro-Evolution. Here, we will focus on a simple yet powerful approach tackled by a team of undergraduate students: On-line Q-Learning using Linear Function Approximation for a Pac-Man agent.

The idea is to assign each state-action pair a Q value. It represents the expected sum of rewards when executing this action in this state. Since the number of states is very big, we cannot learn and store this value for every pair. However, we can approximate it and try to generalize from the trajectories we have seen to face unknown situations. Our linear function approximator does just that: From a state description and an action it approximates the expected Q value. This approximation is learned from the trajectories the agent passed through already. Of course we first have to define the rewards for each state. This is quite simple, e.g. we could use the points gathered in the game step and penalize deaths or idling around (as time is limited).

Features

Even in a fairly simple game like Pac-Man, a complete description of the game state is very complex. For our approximator to work efficiently, we thus have to extract the essence of the state into a set of significant features. Much thought must be put into not leaving out any important information. A great advantage on the other hand is that we can bring prior knowledge into our feature design to manually form non-linear combinations of state information. Now, bringing one's own human intelligence into the way of one's artificial intelligence is generally a step away from real AI or learning. Still, it is unavoidable here due to the limitations of our approach: With just linear combination of basic state variables (such as: there exists a pill at position x), our agent would not be able to learn more abstract tactics.

The design of our features is described in a forthcoming paper.

Learning

Once we determined what awaits Pac-Man in each possible direction, we have to find out how important each feature is for getting rewards (and thus finally for beating the game). This is where the actual learning takes place. Our approximator for the Q function computes a weighted sum of the feature values for each state-action pair. The weights for each of the features are randomly initialized and adapted while playing. Since we can directly see what reward we got for each executed action and calculate the error derivatives, we can adjust the Q values little by little. With a slowly decreasing learning rate and by using random exploration we can assure that the weights will converge on good values. In our experiments, usually they started converging after only a handful games, and it took them about 2000 rounds to effectively reach their final values.

Here you can see an example video of the learning process. Note how the agent first realizes the benefits of pills, only to be scared away by them again after being eaten. Over time it learns that the actual cause of this high penalty are the ghosts alone.

People

While there were many student groups working on different approaches for the game, the approach detailed above including this info page was created by the following students:

- Stefan Bodenlos

- Christof Schötz

- Max Schumacher