Tapir: Modular Vision Toolkit

Tapir is intended as a versatile, easily configurable tool for object detection. It divides the common steps of object detection into several classes of modules, which can be exchanged and combined to fit a given situation.
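The idea of exchangeable module classes can be illustrated with a small C++ sketch. The names below (Source, Detector, Output, Pipeline and the toy modules) are hypothetical and are not Tapir's actual interfaces. Frames are also modelled as plain grayscale buffers rather than OpenCV images so that the example has no dependencies. What it shows is that the pipeline depends only on abstract interfaces, so a camera, detection method or output can be replaced without touching the other modules.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical interfaces for illustration only; NOT Tapir's actual API.
// Frames are plain row-major grayscale buffers to keep the sketch dependency-free.
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<unsigned char> pixels;
};

struct Detection {
    int x;
    int y;
    std::string label;
};

// Module class 1: produces frames (camera, video file, ...).
struct Source {
    virtual ~Source() = default;
    virtual bool grab(Frame& out) = 0;  // returns false when no frame is available
};

// Module class 2: turns a frame into detections.
struct Detector {
    virtual ~Detector() = default;
    virtual std::vector<Detection> detect(const Frame& frame) = 0;
};

// Module class 3: consumes detections (display, network, log, ...).
struct Output {
    virtual ~Output() = default;
    virtual void publish(const std::vector<Detection>& detections) = 0;
};

// The pipeline depends only on the interfaces, so each module can be swapped independently.
class Pipeline {
public:
    Pipeline(std::unique_ptr<Source> s, std::unique_ptr<Detector> d, std::unique_ptr<Output> o)
        : source_(std::move(s)), detector_(std::move(d)), output_(std::move(o)) {}

    // Processes one frame; returns false once the source is exhausted.
    bool step() {
        Frame frame;
        if (!source_->grab(frame)) return false;
        output_->publish(detector_->detect(frame));
        return true;
    }

private:
    std::unique_ptr<Source> source_;
    std::unique_ptr<Detector> detector_;
    std::unique_ptr<Output> output_;
};

// Toy source: a fixed number of 4x4 frames, each with one bright pixel at (x=2, y=1).
struct SyntheticSource : Source {
    explicit SyntheticSource(int frames) : remaining(frames) {}
    bool grab(Frame& out) override {
        if (remaining <= 0) return false;
        --remaining;
        out.width = 4;
        out.height = 4;
        out.pixels.assign(16, 0);
        out.pixels[1 * 4 + 2] = 255;
        return true;
    }
    int remaining;
};

// Toy detector: reports every pixel brighter than a fixed threshold.
struct ThresholdDetector : Detector {
    explicit ThresholdDetector(unsigned char t) : threshold(t) {}
    std::vector<Detection> detect(const Frame& frame) override {
        std::vector<Detection> found;
        for (int y = 0; y < frame.height; ++y)
            for (int x = 0; x < frame.width; ++x)
                if (frame.pixels[y * frame.width + x] > threshold)
                    found.push_back({x, y, "bright"});
        return found;
    }
    unsigned char threshold;
};

// Toy output: appends all detections to a caller-owned vector.
struct RecordingOutput : Output {
    explicit RecordingOutput(std::vector<Detection>* s) : sink(s) {}
    void publish(const std::vector<Detection>& detections) override {
        sink->insert(sink->end(), detections.begin(), detections.end());
    }
    std::vector<Detection>* sink;
};
```

Replacing SyntheticSource with a camera-backed Source implementation, for example, would leave Pipeline and the other modules unchanged.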

Features:

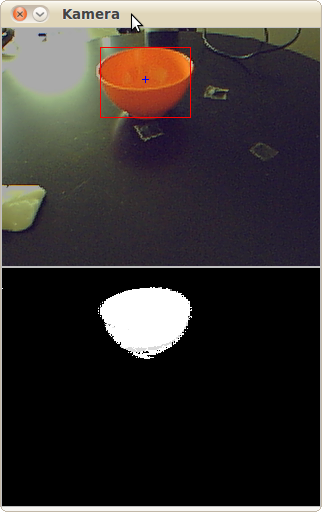

- Provides various object detection methods for use in machine learning.

- Modular design that makes it easy to exchange cameras, detection methods or outputs.

- OpenCV-based implementation, lightweight enough for robotics applications.

- Provides default interfaces for use with CLS2.

Current detection methods:

- Histogram-based color detection with automatic or manual initialization

- Face detection

- Grid detection for mazes
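Tapir's own color detector is built on OpenCV, and the code below is not taken from it. As an illustration of one common way histogram-based color detection works, here is a dependency-free sketch of histogram back-projection on a hue channel. Manual initialization is modelled as building the color histogram from a user-selected rectangle; automatic initialization is not covered. All names (HueImage, buildHistogram, backProject, locate) are hypothetical. With OpenCV, the histogram and back-projection steps would typically be done with cv::calcHist and cv::calcBackProject.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative sketch of histogram back-projection on a hue channel.
// Dependency-free; NOT Tapir's implementation. All names are hypothetical.

// Hue image, row-major, values in [0, 180) as in OpenCV's 8-bit HSV convention.
struct HueImage {
    int width;
    int height;
    std::vector<int> hue;
    int at(int x, int y) const { return hue[y * width + x]; }
};

// Maps a hue in [0, 180) to one of `bins` equal-width bins.
int binOf(int hue, int bins) { return hue * bins / 180; }

// "Manual initialization": build the color model from a user-selected rectangle,
// normalized so that the most frequent bin has weight 1.
std::vector<double> buildHistogram(const HueImage& img, int x0, int y0, int w, int h, int bins) {
    std::vector<double> hist(bins, 0.0);
    for (int y = y0; y < y0 + h; ++y)
        for (int x = x0; x < x0 + w; ++x)
            hist[binOf(img.at(x, y), bins)] += 1.0;
    double peak = *std::max_element(hist.begin(), hist.end());
    if (peak > 0.0)
        for (double& v : hist) v /= peak;
    return hist;
}

// Back-projection: each pixel gets the model weight of its hue bin.
std::vector<double> backProject(const HueImage& img, const std::vector<double>& hist) {
    const int bins = static_cast<int>(hist.size());
    std::vector<double> prob(img.hue.size());
    for (std::size_t i = 0; i < img.hue.size(); ++i)
        prob[i] = hist[binOf(img.hue[i], bins)];
    return prob;
}

struct Centroid {
    double x;
    double y;
    int count;  // number of pixels that passed the threshold
};

// Thresholds the back-projection and returns the centroid of the matching pixels.
Centroid locate(const HueImage& img, const std::vector<double>& prob, double threshold) {
    Centroid c{0.0, 0.0, 0};
    for (int y = 0; y < img.height; ++y)
        for (int x = 0; x < img.width; ++x)
            if (prob[y * img.width + x] >= threshold) {
                c.x += x;
                c.y += y;
                ++c.count;
            }
    if (c.count > 0) {
        c.x /= c.count;
        c.y /= c.count;
    }
    return c;
}
```

A practical detector would first convert camera frames to HSV and might smooth the back-projection or track the object over time (OpenCV's cv::CamShift is one option); the sketch leaves these steps out.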

Supported Platforms

The software is developed and tested under:

- Ubuntu Linux 12.04

- Arch Linux

- Mac OS X 10.8 (10.9 may require installing OpenCV from Git and disabling libCvBlob)

Requirements:

- A C++ compiler

- CMake 2.8.2 or above

Download

The latest software release can be downloaded from here.

Installation

- Get a fresh copy of Tapir

- Unpack the source code

tar xzf tapir.tgz

- Compile the program

mkdir build
cd build
cmake ..
make install

By default, Tapir automatically installs a suitable version of OpenCV, preferably from an archive hosted on our own servers. Alternatively, you can use the newest version from the OpenCV repositories or the version already installed globally on your system; the corresponding options are presented when you invoke cmake.

Documentation

The most recent manual is included in the source package and can be generated with Doxygen by running make doc in the build directory.

People

Researchers working on this project:

- Thomas Lampe

Contact

For more information on this research project, please contact Thomas Lampe.