Brainstormers Tribots motor control

Strategy and path planning algorithms usually do geometric calculations assuming that the robot can move in any direction up to a certain maximal velocity. To achieve this movement, however, the three wheels have to be accelerated appropriately by applying a suitable voltage to each of the robot's three electric motors. The kinematic equations of the robot transform velocities in a robocentric coordinate frame into wheel velocities, while a motor controller handles the voltage control.
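As a rough illustration of the kinematic transformation described above, the following sketch maps a robocentric velocity to wheel speeds for a generic three-wheeled omnidirectional drive. The wheel angles, the wheel distance from the robot center, the wheel radius, and the function name `wheel_speeds` are assumptions chosen for the example, not the actual Tribots geometry or code.

```python
import math

# Hypothetical geometry, for illustration only (not the real Tribots dimensions).
WHEEL_ANGLES = (math.radians(90), math.radians(210), math.radians(330))
WHEEL_DISTANCE = 0.2  # distance from robot center to each wheel, in meters (assumed)
WHEEL_RADIUS = 0.04   # wheel radius in meters (assumed)


def wheel_speeds(vx, vy, omega):
    """Map a robocentric velocity to wheel angular speeds in rad/s.

    vx, vy: translational velocity in the robot frame (m/s)
    omega:  rotational velocity around the robot center (rad/s)
    """
    speeds = []
    for angle in WHEEL_ANGLES:
        # Project the translation onto the wheel's rolling direction
        # and add the contribution of the robot's rotation.
        rim_speed = -math.sin(angle) * vx + math.cos(angle) * vy + WHEEL_DISTANCE * omega
        speeds.append(rim_speed / WHEEL_RADIUS)
    return speeds
```

With this geometry, a pure rotation drives all three wheels at the same speed, while a pure translation yields wheel speeds that sum to zero because the wheels are spaced 120 degrees apart.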

While the kinematic equations can easily be solved in a setup with three individual motors, the motor controller design is non-trivial since several goals must be achieved:

- immediate reaction of the motors with small latencies

- precise control

- stable control avoiding oscillations

- robustness with respect to varying floors

The standard solution to these problems is a PID controller. However, PID controllers are limited in their ability to achieve all of these goals at the same time.
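For reference, a textbook discrete PID controller for a single wheel can be sketched as follows. This is a generic formulation, not the TMC firmware; the class name `PID`, the gains, and the sampling interval `dt` are placeholders for illustration.

```python
class PID:
    """Minimal discrete PID controller (generic textbook form, illustrative only)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.dt = dt              # sampling interval in seconds
        self.integral = 0.0       # accumulated error
        self.prev_error = None    # no previous error before the first update

    def update(self, target, measured):
        """Return the control output for one sampling step."""
        error = target - measured
        self.integral += error * self.dt
        # Skip the derivative term on the first step to avoid a startup spike.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

The three gains trade off against each other: a higher proportional gain shortens the reaction time but tends to cause overshoot and oscillation, which is one reason a single fixed parameter set rarely satisfies all of the goals listed above.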

The Tribots' Approach

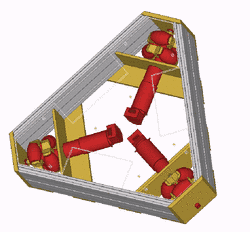

The motor control level of the Brainstormers Tribots is technically based on a Fraunhofer TMC motor controller, which is connected to the robot's control computer via CAN bus. The motors are 90W/24V Maxon DC motors with gear ratio 1:5. The TMC motor controllers are able to control three DC motors. We run them with our own firmware, which allows us to replace the standard PID controllers with our own controllers that offer a higher sampling rate and shorter latencies.

Moreover, to achieve optimal control of the wheel velocities, we replaced the PID controllers with control strategies that were learned using reinforcement learning. This allowed us to encode the motor control goals listed above in the reward function. Using the NFQ (Neural Fitted Q Iteration) learning algorithm with multilayer perceptrons to approximate the state-action value function, the robot was able to generate an appropriate motor controller solely by analyzing its own experience.
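To make the idea of encoding control goals in a reward function more concrete, here is a minimal sketch of a per-step reward for one wheel. It is purely hypothetical: the function name `step_reward`, the tolerance band, and the penalty weight are assumptions for illustration and do not reproduce the reward actually used by the Tribots.

```python
def step_reward(target, measured, prev_action, action,
                tolerance=0.05, switch_penalty=0.1):
    """Hypothetical reward for one control step (higher is better).

    target, measured:    desired and actual wheel velocity
    prev_action, action: previous and current motor command (e.g. voltage)
    """
    error = abs(target - measured)
    # Precision: no penalty inside the tolerance band,
    # otherwise a penalty that grows with the velocity error.
    tracking = 0.0 if error <= tolerance else -error
    # Stability: discourage large jumps in the motor command,
    # which tend to produce oscillations.
    smoothness = -switch_penalty * abs(action - prev_action)
    return tracking + smoothness
```

A learning algorithm that maximizes the sum of such rewards over time is pushed to reach the target velocity quickly, since every step outside the tolerance band costs reward, while avoiding abrupt changes of the motor command.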

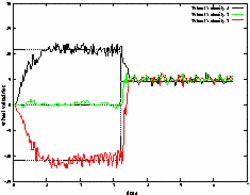

The image shows the desired wheel velocities (dotted lines) and the actual wheel velocities (solid lines) of the three wheels when using controllers learned with reinforcement learning.