Brainstormers Tribots Vision System

Autonomous robots need sensors to interact with their environment in a meaningful way. Because camera systems perceive most of the relevant information and resemble the human visual system, they have become the main sensors in RoboCup. Earlier approaches based on infrared or ultrasonic sensors and on laser range finders have disappeared: the former are too imprecise, and the latter provide information that is of limited use for playing soccer.

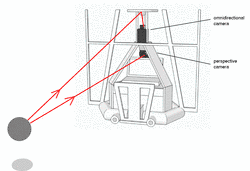

In recent years, a special camera type, the omnidirectional camera, has had its breakthrough in robot soccer. Such a camera consists of a standard perspective camera and a curved (e.g. hyperbolic) mirror. The mirror is mounted on top of the camera so that the camera image shows the mirror's reflection of the environment. This technique yields 360-degree images of the surroundings without pan/tilt mechanics.

The camera images must be processed on a computer to extract the relevant information about landmarks, the ball, and the other robots on the field. Although the RoboCup environment uses special colors to mark the relevant objects (goals in blue and yellow, ball in orange, field in green, field markings in white, robots in black), image analysis turns out to be one of the most demanding challenges in robot soccer: varying lighting conditions, shadows, spotlights, and the unknown environment outside the field (e.g. spectators wearing colored clothes) may mislead approaches that rely on color segmentation alone. Moreover, the special colors are planned to disappear in the future.

The Tribots' Approach

The Brainstormers Tribots use an approach based on color classification combined with a set of heuristics. Because computation time is limited, the analysis of the omnidirectional camera images concentrates on rays arranged radially in the image. Areas of white, black, orange, and green pixels are relevant, while all other colors are ignored. The color classes are defined by cubes in the HSI color space, with an additional split in the YUV color space to make the recognition of orange (the ball color) more robust. Furthermore, we use automatic shutter and white balance controllers to adapt to the different lighting conditions in different areas of the soccer field.
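The ray-based color classification can be illustrated with a minimal sketch. It is not the Tribots' implementation: it uses HSV from Python's standard library as a stand-in for HSI, omits the additional YUV split, and the box thresholds as well as the names `COLOR_BOXES`, `classify`, and `scan_ray` are hypothetical.

```python
import colorsys
import math

# Hypothetical color boxes in HSV (stand-in for HSI), each given as
# (h_min, h_max, s_min, s_max, v_min, v_max) with all values in [0, 1].
COLOR_BOXES = {
    "orange": (0.02, 0.11, 0.6, 1.0, 0.5, 1.0),
    "green": (0.25, 0.45, 0.4, 1.0, 0.2, 1.0),
    "white": (0.0, 1.0, 0.0, 0.15, 0.8, 1.0),
    "black": (0.0, 1.0, 0.0, 1.0, 0.0, 0.15),
}


def classify(rgb):
    """Return the name of the first color box containing the pixel, else None."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    for name, (h0, h1, s0, s1, v0, v1) in COLOR_BOXES.items():
        if h0 <= h <= h1 and s0 <= s <= s1 and v0 <= v <= v1:
            return name
    return None


def scan_ray(image, cx, cy, angle, r_min, r_max):
    """Walk one ray outward from (cx, cy) and collect runs of equal color class.

    image is a list of rows of (r, g, b) tuples. The result is a list of
    (label, first_radius, last_radius) tuples; unclassified pixels are dropped.
    """
    height, width = len(image), len(image[0])
    runs = []
    for r in range(r_min, r_max):
        x = int(round(cx + r * math.cos(angle)))
        y = int(round(cy + r * math.sin(angle)))
        if not (0 <= x < width and 0 <= y < height):
            break  # the ray has left the image
        label = classify(image[y][x])
        if runs and runs[-1][0] == label:
            runs[-1][2] = r  # extend the current run
        else:
            runs.append([label, r, r])
    return [tuple(run) for run in runs if run[0] is not None]
```

Scanning only a fixed number of rays touches a small fraction of the 640×480 pixels, which is the point of the restriction described above; a full scan would call `scan_ray` once per angle.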

Once relevant colored areas have been found, further heuristics are applied to make the recognition more robust. For example, we know that white field markings have a certain width and are surrounded by green areas. When orange areas are found in the image, a detailed analysis is performed to determine the actual size and the center of the orange spot.
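As an illustration of the field-marking heuristic, the following sketch filters white segments along a single ray. It assumes a hypothetical input format, a list of `(label, first, last)` runs ordered from the image center outward, and the width limits are placeholder values rather than the thresholds actually used.

```python
def line_candidates(runs, min_width=1, max_width=8):
    """Return (first, last) for white runs that look like field markings.

    A white run is accepted only if its width lies within the given limits
    and it is directly enclosed by green runs on both sides.
    """
    hits = []
    for i in range(1, len(runs) - 1):
        label, first, last = runs[i]
        if label != "white":
            continue
        prev_label, _, prev_last = runs[i - 1]
        next_label, next_first, _ = runs[i + 1]
        width = last - first + 1
        # Neighbors must touch the white run without an unclassified gap.
        touching = prev_last + 1 == first and last + 1 == next_first
        enclosed = prev_label == "green" and next_label == "green"
        if min_width <= width <= max_width and touching and enclosed:
            hits.append((first, last))
    return hits
```

In an omnidirectional image the apparent width of a marking shrinks with distance, so a real system would probably make the width limits depend on the radius; the fixed limits here only keep the sketch short.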

Finally, the coordinates of all objects found are transformed into robot-centered coordinates. For this purpose, we use a flexible image-to-world mapping based on a lattice map, which takes as arguments the angle at which an object is seen and its distance in image coordinates. This mapping is calibrated in a dedicated calibration procedure before the tournament.
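One way to picture such a lattice map is a grid over viewing angle and image radius whose nodes store calibrated world distances, with bilinear interpolation between the nodes. The sketch below follows that reading; the class `LatticeMap`, its layout, and the assumption that the viewing angle itself needs no correction are illustrative choices, not details taken from the Tribots' software.

```python
import math
from bisect import bisect_right


class LatticeMap:
    """Hypothetical lattice over (angle, image radius) storing world distances."""

    def __init__(self, n_angles, radii, world_dist):
        # radii: increasing image radii in pixels.
        # world_dist[a][k]: calibrated distance in meters at angle index a
        # (angle = a * 2*pi / n_angles) and image radius radii[k].
        self.n_angles = n_angles
        self.radii = radii
        self.world_dist = world_dist

    def to_robot(self, angle, radius_px):
        """Map (angle, image radius) to robot-centered (x, y) in meters."""
        # Angular axis: periodic, so the last lattice column wraps to the first.
        step = 2 * math.pi / self.n_angles
        a = (angle % (2 * math.pi)) / step
        a0 = int(a) % self.n_angles
        a1 = (a0 + 1) % self.n_angles
        ta = a - int(a)
        # Radial axis: clamp to the calibrated range, then find the cell.
        r = min(max(radius_px, self.radii[0]), self.radii[-1])
        k = min(bisect_right(self.radii, r) - 1, len(self.radii) - 2)
        tr = (r - self.radii[k]) / (self.radii[k + 1] - self.radii[k])

        def along(row):
            # Linear interpolation along the radial axis of one angle row.
            return (1 - tr) * row[k] + tr * row[k + 1]

        d = (1 - ta) * along(self.world_dist[a0]) + ta * along(self.world_dist[a1])
        return d * math.cos(angle), d * math.sin(angle)
```

A table of this kind can absorb mirror imperfections and mounting tolerances that a closed-form mirror model would miss, which is presumably why a calibrated lattice is described as flexible.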

The vision system of the Brainstormers Tribots consists of an industrial camera (Sony DFW V500 or Point Grey Flea-2) and a hyperbolic mirror of type Neovision HS3. It is designed to run at a frame rate of 30 Hz on a 1 GHz subnotebook; all computations for one frame take less than 15 ms. The camera images have a resolution of 640×480 pixels and are encoded in YUV 4:2:2.

Tribots' Stereo Vision

The ability of many soccer robots to chip the ball requires a sensing technique that can recognize the ball position in three dimensions, i.e. the angle at which the ball is seen, its distance, and its height above the ground. This is not possible with a single omnidirectional camera. Therefore, the robots of the Brainstormers Tribots are equipped with a second, perspective camera that takes images of the area in front of the robot.

As long as the ball is in the field of view of both cameras, its three-dimensional position can be calculated by following the two light rays defined by the image coordinates at which the ball is seen. The ball position is the point where the two light rays intersect. Although the basic principle of stereo vision is simple, its implementation is tricky. Problems that have to be solved include extending an IEEE 1394 driver to work stably with two cameras, synchronizing both cameras, dealing with erroneously detected orange areas in the camera images, and coping with the limited field of view of both cameras.
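The ray intersection can be sketched as follows. With noisy measurements the two rays are usually skew and do not meet exactly, so this sketch substitutes a standard technique: it returns the midpoint of the shortest segment between the rays, which coincides with the intersection point when one exists. The function name `triangulate` and the representation of a ray as an origin plus a direction are assumptions made for illustration.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _point_on(origin, direction, s):
    return tuple(o + s * d for o, d in zip(origin, direction))


def triangulate(o1, d1, o2, d2, eps=1e-12):
    """Estimate the 3D point seen along two rays o1 + s*d1 and o2 + t*d2.

    Returns (point, gap), where point is the midpoint of the shortest segment
    between the two rays and gap is the length of that segment. Returns None
    if the rays are (nearly) parallel or the point lies behind a camera.
    """
    w = _sub(o1, o2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        return None  # parallel rays carry no depth information
    # Parameters minimizing |(o1 + s*d1) - (o2 + t*d2)|.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    if s <= 0 or t <= 0:
        return None  # closest approach lies behind one of the cameras
    p1 = _point_on(o1, d1, s)
    p2 = _point_on(o2, d2, t)
    midpoint = tuple((u + v) / 2 for u, v in zip(p1, p2))
    gap = math.sqrt(_dot(_sub(p1, p2), _sub(p1, p2)))
    return midpoint, gap
```

A large `gap` suggests that the two cameras are not looking at the same object, so a threshold on it might serve as one way to reject erroneously detected orange areas.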

At RoboCup 2006, the Brainstormers Tribots' stereo vision approach won the Technical Challenge, and in 2007 the technique was used successfully in all matches.