Brainstormers Tribots

The Brainstormers Tribots RoboCup team was established in 2002 and won the world championship in the RoboCup Middle Size League in 2006 and 2007. Building on our experience in the RoboCup simulation league, we started as a student project group at the University of Dortmund under the supervision of Prof. Dr. Martin Riedmiller and moved to the University of Osnabrück in 2003. There, the group consisted of researchers and students from the University of Osnabrück as well as former students. For a list of all members, see here.

The name Brainstormers Tribots is a combination of our simulation team (the Brainstormers) and the midsize team (the Tribots). Our logo (to the right) symbolizes this idea: “Brainstormers Tribots - two classes, one team”.

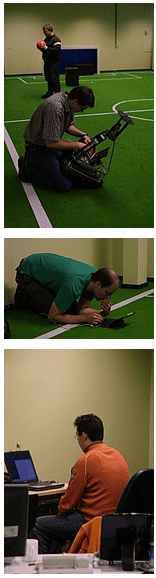

Our robots use an omnidirectional drive. Their sensors are an omnidirectional camera, a perspective camera that allows stereo vision, and odometers. The robots work completely autonomously. We have successfully participated in many international robotic soccer contests, becoming world champion twice and European champion four times (2004-2007). Read the whole story here and view pictures of the various tournaments here.

Robot Design

Every Tribot basically consists of an omnidirectional three-wheel chassis, a tri-motor controller, a JVC laptop, and an omnidirectional camera system. A pneumatic kicking device is attached to the front of the robot. The robots communicate with each other via WLAN interfaces.

Control Software

All soccer robots run control software that was developed entirely at the University of Osnabrück. It analyses camera images, fuses all kinds of information into a consistent model of the world, creates the robot behavior, and controls the motors. All of these calculations are repeated 30 times per second. The program is robust, reliable, and very quick. It consists of 100,000 lines of code and has been developed since 2003 by more than 20 students and researchers. It is written in C and C++ for Linux.

Vision

First of all, the robot must analyse the camera images to get information about its surroundings. The images themselves are arrays of colored pixels. However, the robot is interested in the objects (like goals, ball, other robots) which are shown in these images, not in the pixels themselves. Therefore, the pictures are searched for areas of characteristic colors. Red areas potentially show the ball, white areas potentially refer to white field markings, and black areas might show other robots. Afterwards, the form and size of all areas are checked. If color, form, and size are adequate, the program assumes an area to refer to a certain object in the world.
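The color search described above can be sketched in a few lines. This is only an illustration, not the team's implementation: the RGB thresholds, the function names `classify_pixel` and `find_regions`, and the use of a minimum pixel count as the only size check are all assumptions made for this example.

```python
from collections import deque

def classify_pixel(rgb):
    """Map an (r, g, b) pixel to a coarse color class (thresholds are illustrative)."""
    r, g, b = rgb
    if r > 180 and g < 90 and b < 90:
        return "red"      # candidate ball pixel
    if r > 200 and g > 200 and b > 200:
        return "white"    # candidate field marking
    if r < 50 and g < 50 and b < 50:
        return "black"    # candidate robot
    return None

def find_regions(image, color, min_size=3):
    """Return 4-connected regions of `color` as lists of (row, col).

    Regions with fewer than `min_size` pixels are discarded as noise.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or classify_pixel(image[r0][c0]) != color:
                continue
            # Breadth-first flood fill starting at an unvisited matching pixel.
            queue, region = deque([(r0, c0)]), []
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc]
                            and classify_pixel(image[nr][nc]) == color):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(region) >= min_size:
                regions.append(region)
    return regions
```

A real system would add shape checks (for example, roundness for the ball) on top of the size filter shown here.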

After having found all objects, the robot calculates the position of each object in the world. This is done using calibrated cameras: before a game starts, every robot computes a table that maps each position in the image to the distance at which an object on the ground appears there. Determining the position of the ball is more difficult, since the ball can be chipped and is therefore not always on the ground. To compute its position, the robot uses a second camera (stereo vision). By comparing the positions at which the ball is seen in both cameras, the robot can determine the position as well as the height of the ball.
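Both ideas can be illustrated with a small sketch. It assumes the calibration table is a sorted list of (pixel radius, ground distance) pairs and that each camera yields a viewing ray (origin and direction) towards the ball. The names `distance_from_table` and `triangulate`, the table layout, and the midpoint method are assumptions for illustration, not the team's actual code.

```python
import bisect

def distance_from_table(table, pixel_radius):
    """Look up the ground distance for a pixel radius with linear interpolation.

    `table` is a list of (pixel_radius, distance_m) pairs sorted by pixel radius.
    Values outside the table are clamped to the first or last entry.
    """
    radii = [radius for radius, _ in table]
    i = bisect.bisect_left(radii, pixel_radius)
    if i == 0:
        return table[0][1]
    if i == len(table):
        return table[-1][1]
    (r0, d0), (r1, d1) = table[i - 1], table[i]
    return d0 + (d1 - d0) * (pixel_radius - r0) / (r1 - r0)

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def triangulate(p1, d1, p2, d2):
    """Estimate a 3D point from two viewing rays p1 + s*d1 and p2 + t*d2.

    Returns the midpoint of the shortest segment connecting the two rays,
    which equals their intersection when the rays actually meet.
    """
    w = [a - b for a, b in zip(p1, p2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    closest1 = [p + s * k for p, k in zip(p1, d1)]
    closest2 = [p + t * k for p, k in zip(p2, d2)]
    return tuple((u + v) / 2 for u, v in zip(closest1, closest2))
```

The third coordinate of the triangulated point is the height of the ball above the ground, which a single camera with a ground-plane table cannot provide.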

Read more about the vision system here.

World model

The next step of calculation is to determine the robot's own position. The robot analyses the white field markings which have been recognized in the camera images. It determines the position on the field at which the lines it has seen fit in the best way to the lines it expects to see. For example, if the robot sees a circle of white lines in the camera image, it is very likely that the robot is close to the center circle of the field.
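The matching idea can be sketched as a brute-force search: for each candidate pose, transform the line points seen by the robot into field coordinates and measure how far they lie from the known field lines. This is a simplified illustration under stated assumptions. The field is modelled as a list of line segments, the candidate poses are given explicitly, and the names `pose_error` and `best_pose` are hypothetical. The team's actual method may be considerably more efficient.

```python
import math

def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment from a to b (2D)."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def pose_error(pose, seen_points, field_lines):
    """Sum of squared distances between seen line points and the field model.

    `pose` is (x, y, heading); `seen_points` are in robot coordinates.
    """
    x, y, heading = pose
    cos_h, sin_h = math.cos(heading), math.sin(heading)
    error = 0.0
    for rx, ry in seen_points:
        # Transform the point from robot coordinates into field coordinates.
        wx = x + cos_h * rx - sin_h * ry
        wy = y + sin_h * rx + cos_h * ry
        nearest = min(point_segment_distance((wx, wy), a, b) for a, b in field_lines)
        error += nearest ** 2
    return error

def best_pose(candidates, seen_points, field_lines):
    """Return the candidate pose whose predicted lines fit the seen lines best."""
    return min(candidates, key=lambda pose: pose_error(pose, seen_points, field_lines))
```

In practice the candidates would come from the previous position estimate and odometry rather than from a fixed list.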

Additionally, the robot calculates its own velocity by comparing the latest positions it has calculated from the camera images. The robot must also know the position of the ball and its movement. For this, too, it uses the latest positions at which it has seen the ball.
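One simple way to turn a short history of positions into a velocity is a least-squares line fit over time. The sketch below assumes timestamped (t, x, y) samples and a constant velocity over the window. The function name `estimate_velocity` and the choice of a linear fit are assumptions for illustration.

```python
def estimate_velocity(samples):
    """Estimate (vx, vy) from timestamped positions by a least-squares line fit.

    `samples` is a list of (t, x, y) tuples with at least two distinct times.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    t_mean = sum(t for t, _, _ in samples) / n
    x_mean = sum(x for _, x, _ in samples) / n
    y_mean = sum(y for _, _, y in samples) / n
    spread = sum((t - t_mean) ** 2 for t, _, _ in samples)
    if spread == 0:
        raise ValueError("all samples share the same timestamp")
    # Slope of the best-fit line through the positions, per coordinate.
    vx = sum((t - t_mean) * (x - x_mean) for t, x, _ in samples) / spread
    vy = sum((t - t_mean) * (y - y_mean) for t, _, y in samples) / spread
    return vx, vy
```

Using several samples instead of only the last two makes the estimate less sensitive to noise in individual camera frames.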

Learn more about our self localization and object tracking mechanisms.

Robot behavior

When all information about the situation on the soccer field has been calculated, the robot starts to compute a sensible behavior. The robot knows a variety of behavior patterns, like dribbling, kicking, and defending, that it can combine as building blocks of its strategy. Every behavior pattern is assigned conditions which describe when it should be executed. For example, a kicking behavior can only be executed if the robot is already close to the ball. Hence “being close to the ball” becomes a precondition of such a behavior pattern. These preconditions are checked for all behavior patterns, and the pattern that is best in the present situation is selected.

Sometimes our robots coordinate their activities (via radio transmission), e.g. to play a pass. The player who wants to pass announces its intention so that the receiver can already drive to the right position to get the ball. In some situations a defender may also call for help to protect the team's own goal.
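The precondition check described above can be sketched as follows. The example assumes that "best" means "highest priority among all applicable patterns". The `Behavior` class, the priority values, and the world-model keys (`ball_distance`, `goal_distance`) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

World = Dict[str, float]

@dataclass
class Behavior:
    name: str
    priority: int                            # higher value wins (assumed ranking)
    precondition: Callable[[World], bool]    # must hold for the behavior to run

def select_behavior(behaviors: List[Behavior], world: World) -> Behavior:
    """Return the highest-priority behavior whose precondition holds."""
    applicable = [b for b in behaviors if b.precondition(world)]
    if not applicable:
        raise LookupError("no behavior is applicable in this situation")
    return max(applicable, key=lambda b: b.priority)

BEHAVIORS = [
    Behavior("kick", 3, lambda w: w["ball_distance"] < 0.3 and w["goal_distance"] < 4.0),
    Behavior("dribble", 2, lambda w: w["ball_distance"] < 0.3),
    Behavior("go_to_ball", 1, lambda w: True),
]
```

For example, `select_behavior(BEHAVIORS, {"ball_distance": 2.0, "goal_distance": 3.0})` picks `go_to_ball`, because neither kicking nor dribbling is possible while the ball is two meters away.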

In the next step of computation, the robot must determine which path to take to its destination. Other robots might block the direct way. The robot calculates the best way to its destination, taking into account all obstacles and the boundaries of the soccer field. Since it cannot observe obstacles that are occluded or far away, it sometimes has to make assumptions about which way is better.

Many of the robots' behavior patterns are designed by the software programmers. Others, in contrast, have been learned autonomously by the robot using reinforcement learning. In the beginning, the robot does not know how to solve a certain task, e.g. how to dribble the ball. But it can try. For every trial the robot is rewarded or punished, depending on the success of the trial. If the performance was good and the robot was able to dribble the ball for a long distance, it gets a high reward. The robot then stores the dribbling it performed and tries to become even better, to get even more reward. If the robot is punished for a bad trial, it avoids this kind of behavior in the next trial in order to get a reward again. In this way, the robot can learn to optimize its behavior. This kind of learning is motivated by the way humans and animals learn and has been transferred to computers. Of course, rewarding a robot is different from rewarding an animal. Instead of tasty food, the robot simply receives a numerical value.
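The reward-and-punishment loop can be illustrated with tabular Q-learning on a toy task. The text does not say which learning algorithm the robots use, so Q-learning is swapped in here purely as a well-known example. The corridor environment, the reward values, and all parameter settings are assumptions for this sketch.

```python
import random

ACTIONS = (-1, +1)  # step left, step right in a one-dimensional corridor

def train(num_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values for reaching the rightmost state of a corridor.

    Reaching the goal is rewarded with +1; every other step is punished
    with -0.1. Returns a dict mapping (state, action) to its learned value.
    """
    rng = random.Random(seed)
    goal = num_states - 1
    q = {(s, a): 0.0 for s in range(num_states) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # step limit per trial
            # Mostly exploit what was learned so far, sometimes try something new.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state = min(max(state + action, 0), goal)
            reward = 1.0 if next_state == goal else -0.1
            future = 0.0 if next_state == goal else max(q[(next_state, a)] for a in ACTIONS)
            # Move the stored value towards reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
            if state == goal:
                break
    return q

def greedy_policy(q, num_states=5):
    """Return the best-valued action for every non-goal state."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(num_states - 1)]
```

After training, the greedy policy moves right in every state. The robot was never told this rule; it follows only from the rewards received during the trials.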

Read more about path planning, our cognitive architecture and our self-learning approach to robotic behavior.

Motor control

Finally, the intention of the robot must be executed by the motors. The intention itself only states a direction of movement and a velocity. This information must be transformed into wheel velocities for each of the three wheels (i.e. how fast each wheel must turn so that the robot drives in a certain direction with a certain velocity). Then, the motor controller (an electronic device) determines the right voltage to make the motors turn at the right speed. Sometimes, the robot wants to kick the ball. Then, it additionally activates the pneumatic kicking device.
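The transformation into wheel velocities follows from the geometry of a three-wheel omnidirectional chassis. The sketch below assumes three wheels mounted symmetrically at 60°, 180°, and 300° around the robot, each rolling tangentially to the chassis, at a distance of 0.2 m from the center. These mounting angles, the body radius, and the function name `wheel_speeds` are assumptions and are not taken from the Tribot hardware.

```python
import math

# Assumed mounting angles of the three wheels, measured from the robot's x-axis.
WHEEL_ANGLES = (math.radians(60), math.radians(180), math.radians(300))

def wheel_speeds(direction, speed, rotation=0.0, body_radius=0.2):
    """Convert a desired robot motion into rim speeds for the three wheels.

    direction   -- driving direction in radians, in robot coordinates
    speed       -- translational speed in m/s
    rotation    -- angular velocity in rad/s (counter-clockwise positive)
    body_radius -- distance from the robot center to each wheel in meters
    """
    vx = speed * math.cos(direction)
    vy = speed * math.sin(direction)
    # Each wheel contributes along its tangential rolling direction
    # (-sin(angle), cos(angle)); rotation adds body_radius * rotation to all.
    return tuple(
        -math.sin(angle) * vx + math.cos(angle) * vy + body_radius * rotation
        for angle in WHEEL_ANGLES
    )
```

For a pure translation the three wheel speeds sum to zero, and for a pure rotation all three wheels turn at the same speed. Dividing each rim speed by the wheel radius would give the angular velocity that the motor controller has to maintain.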

More information on our approach to motor control can be found here.

Downloads

Introductory Presentation on the Tribot's Behavior

These slides were first presented during a MidSize workshop in November 2008.

Omnidirectional test images

This is a collection of test images taken by the omnidirectional camera on top of the robots while driving on a test field. The field is 8 m wide and has a half-length of 5 m. The images are stored in JPEG format. To read these images, you can use the JPEGIO class from the German Open 2005 code (see below).

German Open 2005 Code

To improve the general level of play in the RoboCup Middle Size League, we decided to publish the code which we used in the German Open 2005 competition. Click here for more information.

Publications

2009

- Martin Lauer, Martin Riedmiller (2009) Participating in Autonomous Robot Competitions: Experiences from a Robot Soccer Team. Bibtex

2007

- Heiko Müller, Martin Lauer, Roland Hafner, Sascha Lange, Martin Riedmiller (2007) Making a Robot Learn to Play Soccer Using Reward and Punishment. In Proceedings of the German Conference on Artificial Intelligence, KI 2007. Osnabrück Germany. Bibtex

2006

- S. Lange, M. Riedmiller (2006) Appearance Based Robot Discrimination using Eigenimages. In Proceedings of the RoboCup Symposium 2006. Bremen, Germany. Bibtex

2005

- M. Lauer, S. Lange, M. Riedmiller (2005) Calculating the Perfect Match: An Efficient and Accurate Approach for Robot Self-Localisation. In RoboCup-2005: Robot Soccer World Cup IX, LNCS. Springer. Bibtex

2004

- Enrico Pagello, Emanuele Menegatti, Daniel Polani, Ansgar Bredenfeld, Paulo Costa, Thomas Christaller, Adam Jacoff, Martin Riedmiller, Alessandro Saffiotti, Elizabeth Sklar, Takashi Tomoichi (2004) RoboCup-2003: New Scientific and Technical Advances. AI Magazine, American Association for Artificial Intelligence (AAAI). Bibtex

- S. Lange, M. Riedmiller (2004) Evolution of Computer Vision Subsystems in Robot Navigation and Image Classification Tasks. In RoboCup-2004: Robot Soccer World Cup VIII, LNCS. Springer. Bibtex

2003

- M. Arbatzat, S. Freitag, M. Fricke, R. Hafner, C. Heermann, K. Hegelich, A. Krause, J. Krüger, M. Lauer, M. Lewandowski, A. Merke, H. Müller, M. Riedmiller, J. Schanko, M. Schulte-Hobein, M. Theile, S. Welker, D. Withopf (2003) Creating a Robot Soccer Team from Scratch: the Brainstormers Tribots. In CD attached to Robocup 2003 Proceedings, Padua, Italy. Bibtex

- M. Riedmiller, A. Merke, W. Nowak, M. Nickschas, D. Withopf (2003) Brainstormers 2003 - Team Description. In CD attached to Robocup 2003 Proceedings, Padua, Italy. Bibtex

2001

- A. Merke, M. Riedmiller (2001) Karlsruhe Brainstormers - A Reinforcement Learning Way to Robotic Soccer II. In RoboCup-2001: Robot Soccer World Cup V, LNCS. pp. 322-327. Springer. Bibtex

2000

- M. Riedmiller, A. Merke, D. Meier, A. Hoffmann, A. Sinner, O. Thate, C. Kill, R. Ehrmann (2000) Karlsruhe Brainstormers - A Reinforcement Learning Way to Robotic Soccer. In RoboCup-2000: Robot Soccer World Cup IV, LNCS. Springer. Bibtex

- S. Buck, M. Riedmiller (2000) Learning Situation Dependent Success Rates Of Actions In A RoboCup Scenario. In Proceedings of PRICAI '00. pp. 809. Melbourne, Australia. Bibtex

1999

- M. Riedmiller, S. Buck, A. Merke, R. Ehrmann, O. Thate, S. Dilger, A. Sinner, A. Hofmann, L. Frommberger (1999) Karlsruhe Brainstormers - Design Principles. In RoboCup-1999: Robot Soccer World Cup III, LNCS. Springer. Bibtex