Approximate Transition Graphs (ATG)

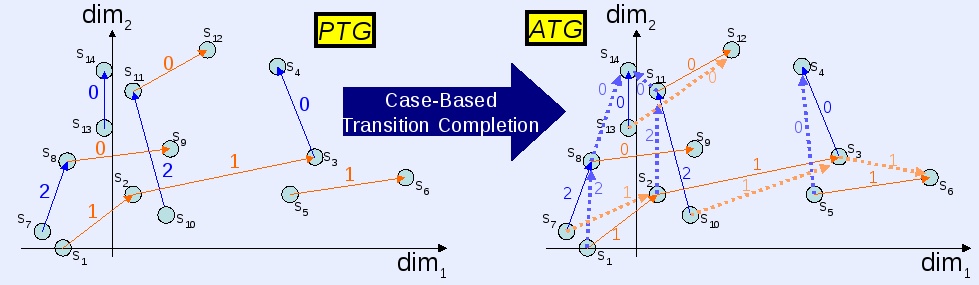

![]() Aiming at fast and efficient learning, batch-mode reinforcement learning (RL) methods store all transition

tuples in an experience set. On the one hand, the ATG projects follows this idea of batch-mode RL and exploit the set of stored experience to construct an approximate

state transition graph for the respective environment. On the other hand,

the ATG approach utilizes case-based reasoning and employs k-nearest neighbor techniques to gain an approximated state-action value

function for a continuous state space from which to infer a near-optimal policy.

Aiming at fast and efficient learning, batch-mode reinforcement learning (RL) methods store all transition

tuples in an experience set. On the one hand, the ATG projects follows this idea of batch-mode RL and exploit the set of stored experience to construct an approximate

state transition graph for the respective environment. On the other hand,

the ATG approach utilizes case-based reasoning and employs k-nearest neighbor techniques to gain an approximated state-action value

function for a continuous state space from which to infer a near-optimal policy.

Technical Background

Initially, a model-free reinforcement learning agent is clueless about state transitions that may occur when taking specific actions, as well as about the cost structure of the environment. During ongoing learning, however, it gains more experience and competence which it must utilize as smartly as possible in order to develop a good behavior policy.

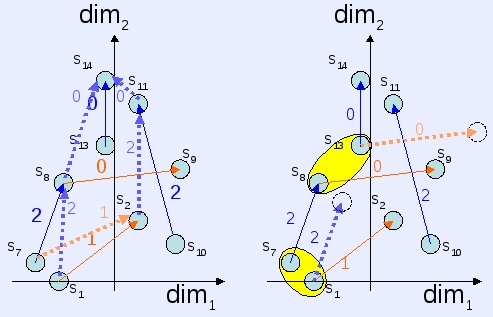

Our learning approach first creates an approximate transition graph (ATG) from its experience using case-based techniques and, second, performs RL on the ATG in order to finally induce a sample-based policy that features near-optimal performance.

Core to the approach is an efficient exploration mechanism. Choosing actions epsilon-greedily during learning means that the agent picks an arbitrary action with probability epsilon. Instead of doing that, the selection of an explorative action may also be guided by the experience the agent has made so far. We have developed an efficient exploration strategy that is conducive for the construction of an approximate transition graph.

A second important feature of the ATG approach is to incorporate the idea of transformational analogy (TA) into the learning algorithm. TA means that a successful solution (or action) used in a similar situation is

transformed into a new solution (action) for the current situation. This basic This idea can easily be integrated into the construction of ATGs.

A second important feature of the ATG approach is to incorporate the idea of transformational analogy (TA) into the learning algorithm. TA means that a successful solution (or action) used in a similar situation is

transformed into a new solution (action) for the current situation. This basic This idea can easily be integrated into the construction of ATGs.

For the purpose of evaluating our case-based approach to state-action value function approximation with ATGs, we have turned to two classical reinforcement learning benchmarks, the pole and the cart pole benchmark problems. We have achieved empirical results that were hardly surpassed by any other contemporary reinforcement learning methods.

Our paper on “An Analysis of Case-Based Value Function Approximation by Approximating State Transition Graphs” was a nominee for the Best Paper Award at the International Conference on Case-Based Reasoning 2007.

- T. Gabel and M. Riedmiller. An Analysis of Case-Based Value Function Approximation by Approximating State Transition Graphs. Proceedings of the 7th International Conference on Case-Based Reasoning (ICCBR 2007). Springer, Belfast, UK, 2007. pdf

People

Researchers involved in this project: